Mythology in C/C++: Shifts are Expensive

|

|

Home |

|

Back To Tips Page |

|

Mythology in C/C++: Shifts are Expensive |

|

There are a large number of performance-related myths out there. Most of them are nonsense. They may not have been nonsense at one time in history, but, sadly, they have become Urban Legends, and the set of beliefs is propagated from one programmer to another, or from one Web site to another, without anyone even questioning them, let alone doing some experiments to prove or disprove the myths.

One common myth I've seen is that "You should not use multi-bit shift instructions, and you should never use a non-constant shift amount, because these operations are very expensive". I don't believe this. At one time, decades ago, when we programmed on the 8088, the ALU had a "serial shifter" that shifted one bit per CPU clock cycle. But in later years, these were replaced with what we knew as "barrel shifters", a shifter which can do a shift of any number of bits in constant time.

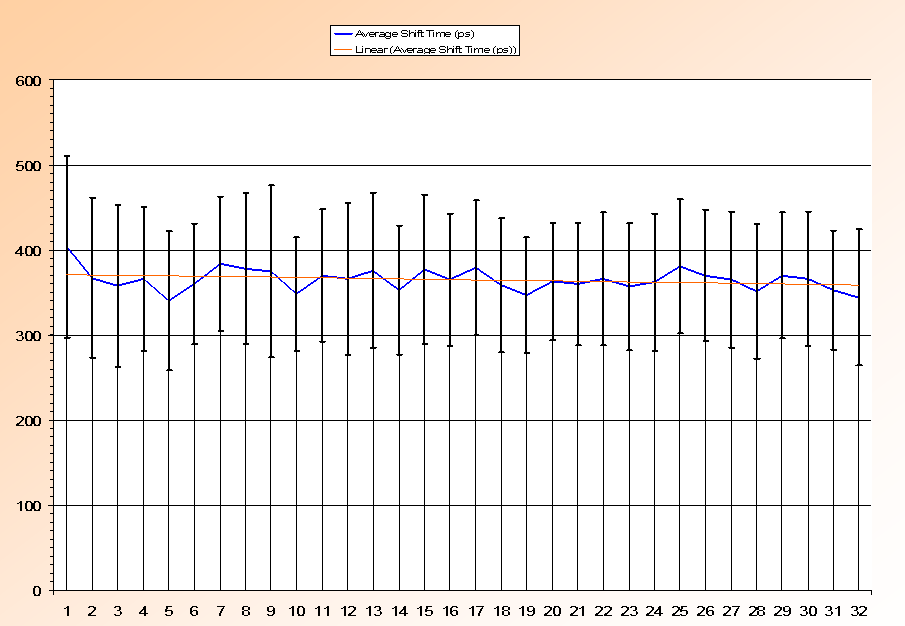

So I endeavored to prove this. I wrote a little test harness that would execute a shift instruction of any arbitrary amount, and then ran a series of tests shifting 1 bit, 2 bits, 3 bits, up to 31 bits. I wrote out the shift times, as a "CSV" (Comma-Separated Values) format file, loaded it up into Excel, and created a graph. Here's the result:

Note that the line is nearly flat, varying from about 350ps to about 400ps. The experiment was done by creating a function that took one argument, and was written in assembly code. I ran several tens of millions of tests and got a value which is the "baseline" cost. I then inserted a shift instruction (the baseline included time to load the shift count into the CL register), and ran several tens of millions of tests again. The difference between the code with no shift instruction and the code with a shift instruction was then plotted in the graph above, where the number of bits shifted is plotted on the x-axis and the time, in picoseconds, is plotted on the y-axis. Note that the slight variations are due to sampling errors and distortions due to operating system overhead. Note that there is no linear relationship between the shift distance and the amount of time; the average (as computed by Excel) is about 370ps (picoseconds), and the deviation for any value is no more than about 30ps.

The error bars are computed based on the actual data collected; note that all variance is well within the one-sigma error bars.

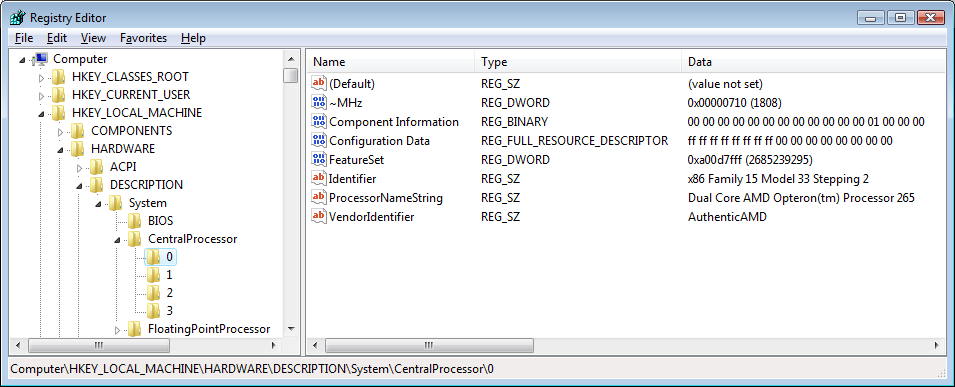

This test was run on a dual-chip dual-core AMD system with a nominal clock cycle time of 1.8GHz:

The basic clock cycle is 550ps, and the AMD machine, like the Intel machines, is a highly pipelined superscalar architecture with multilevel caches. This gives an average instruction dispatch time of 275ps (like the Intel machines, it dispatches two non-floating-point instructions per clock cycle). So the shift times are consistent with that range of clock times. But the important part of the test is that the curve, across the range of shift values, is nearly totally flat.

Shift time is constant, independent of distance. The myth that multi-bit shifts are expensive is BUSTED!

![]()

The views expressed in these essays are those of the author, and in no way represent, nor are they endorsed by, Microsoft.